Your AI-Built MVP Works. Now What?

Three things to add before you hire your first engineer

You built your product in six weeks using AI tools. It works. A few customers are paying. Vibe coding feels real.

Then, during your first VC pitch, an investor asks how you track your sales pipeline. Your tech co-founder wants to know if customers are actually using the feature you shipped last month. You don't have good answers. Not because you don't care. Because the product was built to work, not to be watched.

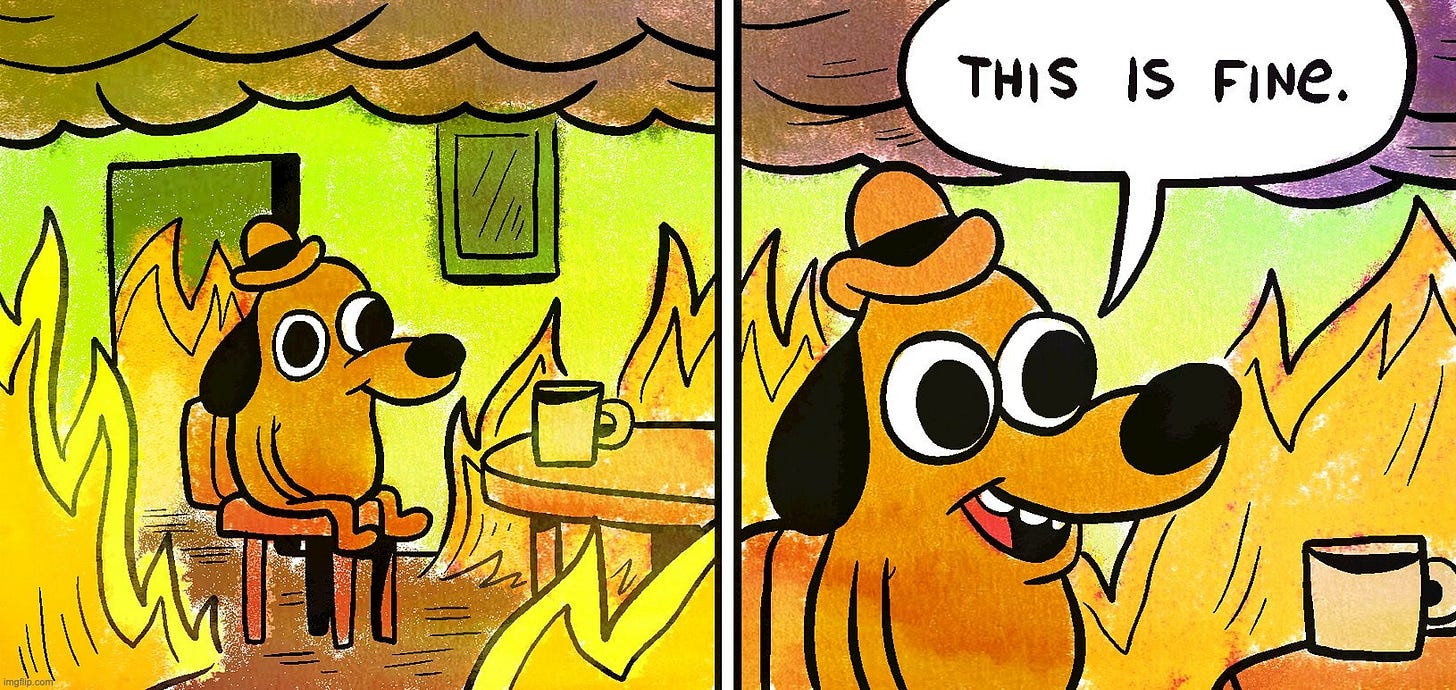

This is where most AI-built products hit a wall. Not because they're bad. They usually solve a real problem and users are happy. The issue is that the code that helped you move fast skipped the layer that tells you what's happening inside your own product.

I've seen this a few times in the past year. A founder builds with Lovable or Claude. The product looks solid. Customers are onboarding. Revenue is coming in. Then a VC asks a straight question about usage or retention, and the founder spends the weekend digging through a database to find the answer.

What's underneath is usually the same three gaps.

No analytics layer, so you can't tell which features get used.

No dashboard, so every business question needs a manual database query.

No monitoring, so you find out about outages from a customer email.

None of that is a mistake. Speed was the goal. AI tools are good at building features. They're not good at building the layer around those features. Nobody prompted Lovable to set up error tracking. You were shipping. That was the right call.

The problem starts when the business outgrows the code. When investors need numbers you can't produce. When an enterprise prospect asks about data residency and you have to check. When your first engineer opens the repo, reads for ten minutes, and the energy in the room changes.

At that point, rewriting everything is rarely the answer. It burns time and kills momentum. The better question is: what needs to exist before someone else starts making changes?

In practice, three things.

Visibility into usage. You need to know what customers are doing inside your product. Not vanity metrics. Basic things. Active users last week. Which features get used daily. Where people drop off. Without this, every product decision is a guess. A product analytics tool like PostHog gets you there fast. Hotjar adds session recordings that show where people get stuck.
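If you want to see what those basic numbers look like before picking a tool, here is a minimal sketch in Python. It assumes you already log one record per user action somewhere. The field names (`user_id`, `feature`, `timestamp`), the function names, and the sample data are all made up for illustration, not taken from any particular product or analytics tool.

```python
from datetime import datetime, timedelta


def weekly_active_users(events, now):
    """Count distinct users with at least one event in the 7 days before `now`."""
    cutoff = now - timedelta(days=7)
    return len({e["user_id"] for e in events if cutoff <= e["timestamp"] <= now})


def feature_usage(events, now, days=7):
    """Map each feature to the number of distinct users who used it in the window."""
    cutoff = now - timedelta(days=days)
    users_by_feature = {}
    for e in events:
        if cutoff <= e["timestamp"] <= now:
            users_by_feature.setdefault(e["feature"], set()).add(e["user_id"])
    return {feature: len(users) for feature, users in users_by_feature.items()}


# Hypothetical sample data: in a real product these rows would come from
# your events table or your analytics tool's export.
NOW = datetime(2024, 6, 10, 12, 0)
EVENTS = [
    {"user_id": "u1", "feature": "export", "timestamp": datetime(2024, 6, 9, 9, 0)},
    {"user_id": "u1", "feature": "export", "timestamp": datetime(2024, 6, 8, 9, 0)},
    {"user_id": "u2", "feature": "dashboard", "timestamp": datetime(2024, 6, 7, 9, 0)},
    {"user_id": "u3", "feature": "export", "timestamp": datetime(2024, 5, 1, 9, 0)},
]

print(weekly_active_users(EVENTS, NOW))  # 2 (u1 and u2; u3 is outside the window)
print(feature_usage(EVENTS, NOW))        # {'export': 1, 'dashboard': 1}
```

Counting distinct users rather than raw events is a deliberate choice here: one power user clicking export fifty times should not make a feature look popular.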

Deployment that doesn't depend on you. If releasing a new version relies on your laptop and steps you remember from habit, that's a risk. A simple CI/CD setup that runs basic checks and deploys the same way every time removes you as the single point of failure. Add basic error tracking so you find out when something breaks before your customers do.
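The "runs basic checks the same way every time" part can start very small. Below is a rough sketch of a pre-deploy gate in Python, assuming your checks are ordinary command-line commands. The `run_checks` helper and the entries in `CHECKS` are hypothetical placeholders. Swap in whatever your project actually uses for tests and linting.

```python
import subprocess
import sys


def run_checks(checks):
    """Run each (name, command) pair in order.

    Returns the name of the first failing check, or None if all pass.
    """
    for name, command in checks:
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode != 0:
            print(f"FAILED: {name}")
            print(result.stderr)
            return name
        print(f"ok: {name}")
    return None


# Hypothetical checks for a Python project: a syntax pass over the repo,
# then the test suite (this assumes pytest is installed).
CHECKS = [
    ("syntax", [sys.executable, "-m", "compileall", "-q", "."]),
    ("tests", [sys.executable, "-m", "pytest", "-q"]),
]
```

A CI job would call `run_checks(CHECKS)` and refuse to deploy if it returns anything other than `None`. The point is not this particular script. It is that the steps live in the repo instead of in your memory.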

A codebase someone else can read. When your first engineer joins, can they understand how it fits together without you in the room? A clear README explaining how to run the project, how it's structured, and how to deploy it goes a long way. Most AI-built projects skip this because the founder never needed it.

When those three things are in place, the code doesn't need to be perfect. It needs to be legible, stable, and observable enough for the business to run on it.

The first phase was about proving the idea. The next phase is about making the product something other people can trust and build on. Those are different jobs.

If you're in that transition and want a second pair of eyes on what to fix first, book a free 20-minute call.